At Novature Tech, we classify non-functional testing into two major categories: Business Facing Tests and Technology Facing Tests.

Business Facing Tests are carried out to ensure the end-user experience of the application under test. The testing carried out under Business Facing Tests includes the following.

Scenario Testing is carried out by mimicking or exaggerating real-life scenarios. It requires close coordination with business users, who can help define plausible scenarios and workflows that mimic end-user behavior. Real-life domain knowledge is critical to creating accurate scenarios. We test the system from an end-to-end perspective, but not necessarily as a black box. We use a technique called “soap opera testing.” The idea is to take a scenario based on real life, exaggerate it the way TV soap operas exaggerate behavior and emotions, and compress it into a quick sequence of events. We raise questions like “What’s the worst thing that can happen, and how did it happen?”

When testing different scenarios, we ensure that both the data and the flow are realistic. We find out whether the data comes from another system or is entered manually, and where possible we ask customers to provide sample data for testing. Real data will flow through the system and can be checked along the way. In large systems, the data will behave differently depending on what decisions are made. When testing end-to-end, we make spot checks to make sure the data, status flags, calculations, and so on are behaving as expected. We use flow diagrams and other visual aids to help us understand the functionality and carry out the testing accordingly.

Exploratory testing (ET) is a sophisticated, thoughtful approach to testing without a script, and it enables testers to go beyond the obvious variations that have already been tested. Exploratory testing combines learning, test design, and test execution into one test approach. We apply heuristics and techniques in a disciplined way so that the “doing” reveals more implications than just thinking about a problem. As we test, we learn more about the system under test and can use that information to help us design new tests.

Exploratory testing is not a means of evaluating the software through exhaustive testing. It is meant to add another dimension to testing. Exploratory testing uses the tester’s understanding of the system, along with critical thinking, to define focused, experimental “tests” which can be run in short time frames and then fed back into the test planning process.

Exploratory testing is based on:

Several components are typically needed for useful exploratory testing:

Session-Based Testing

Session-based testing combines accountability and exploratory testing. It gives a framework to a tester’s exploratory testing experience so that they can report results in a consistent way. In session-based testing, we create a mission or a charter and then time-box our session so we can focus on what’s important. Too often as testers, we can go off track and end up chasing a bug that might or might not be important to what we are currently testing. Sessions are divided into three kinds of tasks: test design and execution, bug investigation and reporting, and session setup. We measure the time we spend on setup versus actual test execution so that we know where we spend the most time. We can capture results in a consistent manner so that we can report back to the team.
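As a rough illustration of the time tracking described above, the sketch below records the minutes spent on each of the three task kinds in one time-boxed session and reports each share of the total. The `Session` class, its field names, and the sample charter are hypothetical and only meant to show the idea.

```python
from dataclasses import dataclass


@dataclass
class Session:
    """One time-boxed exploratory session (all names here are illustrative)."""
    charter: str
    design_and_execution_min: int   # test design and execution
    bug_investigation_min: int      # bug investigation and reporting
    setup_min: int                  # session setup

    def total_minutes(self) -> int:
        return (self.design_and_execution_min
                + self.bug_investigation_min
                + self.setup_min)

    def breakdown(self) -> dict:
        """Return the percentage of session time spent on each task kind."""
        total = self.total_minutes()
        if total == 0:
            raise ValueError("session has no recorded time")
        return {
            "design_and_execution": 100 * self.design_and_execution_min / total,
            "bug_investigation": 100 * self.bug_investigation_min / total,
            "setup": 100 * self.setup_min / total,
        }


# Hypothetical 90-minute session
session = Session(
    charter="Explore checkout with an expired payment card",
    design_and_execution_min=54,
    bug_investigation_min=27,
    setup_min=9,
)
for task, share in session.breakdown().items():
    print(f"{task}: {share:.0f}%")
```

Reporting the same three figures for every session is what makes results comparable across testers and charters.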

Usability Testing is carried out to ensure a better user experience. It covers the following areas for users:

User Needs and Persona Testing

Let’s look at an online shopping example. We think about who will use the site. Will it be people who have shopped online before, or brand-new users who have no idea how to proceed? Most likely it will be a mixture of both, as well as others. We take the time to ask the marketing group for the demographics of the end users; the numbers help us plan our testing. We can also simply assume the roles of novice, intermediate, and expert users as we explore the application. Can users figure out what they are supposed to do without instructions? If there are many first-time users, the interface might need to be very simple. If the application is custom-built for specific types of users, it might need to be “smart” rather than intuitive. Training sessions might be sufficient to get over the initial lack of usability so that the interface can be designed for maximum efficiency and utility.

Navigation

Navigation is another aspect of usability testing. It’s incredibly important to test links and make sure the tabbing order makes sense. If a user has a choice of applications or websites, and has a bad first experience, they likely won’t use your application again. Some of this testing is automatable, but it’s important to test the actual user experience. If you have access to the end users, get them involved in testing the navigation. Pair with a real user, or watch one actually use the application and take notes. When you’re designing a new user interface, consider using focus groups to evaluate different interfaces. You can start with mock-ups and flows drawn on paper, get opinions, and try HTML mock-ups next, to get early feedback.

Check Out the Competition

When evaluating an application for usability, we also consider other applications that are similar. How do they accomplish tasks? Are they user-friendly or intuitive? If we can get access to competing software, we take some time to research how those applications work and compare them with the product under test. We advise our customers on whether usability testing is required, depending on the objective of the application or product under test. If you’re producing an internal application to be used by a few users who will be trained in its use, you probably don’t need to invest much in usability testing.

Technology Facing Tests include validation of configuration, security, performance, memory management, the “ilities” (e.g., reliability, interoperability, and scalability), recovery, and even data conversion.

In an application or product, we must be concerned with qualities such as security, maintainability, interoperability, compatibility, reliability, and installability. We call these qualities the “ilities,” and the testing carried out to validate them “ility” testing.

Security Testing

OK, it doesn’t end in -ility, but we include it in the “ility” bucket because we use technology-facing tests to appraise the security aspects of the product. Security is a top priority for every organization these days. Every organization needs to ensure the confidentiality and integrity of its software. It also wants to verify concepts such as non-repudiation: a guarantee that a message has been sent by the party that claims to have sent it and received by the party that claims to have received it. The application needs to perform the correct authentication, confirming each user’s identity, and authorization, allowing the user access only to the services they’re authorized to use. We have a separate Testing Competency Center to carry out Security Testing.
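The difference between authentication and authorization can be made concrete with a small sketch. Everything in it is an assumption for illustration: the in-memory token and permission tables, the user names, and the function names are hypothetical and do not describe any particular product or Novature’s own tooling.

```python
import hmac

# Hypothetical in-memory data; a real system would use its own identity store.
API_TOKENS = {"alice": "token-a", "bob": "token-b"}
PERMISSIONS = {"alice": {"orders", "reports"}, "bob": {"orders"}}


def authenticate(user, token):
    """Authentication: confirm the caller is who they claim to be."""
    expected = API_TOKENS.get(user)
    # compare_digest avoids leaking information through comparison timing
    return expected is not None and hmac.compare_digest(expected, token)


def authorize(user, service):
    """Authorization: allow access only to services granted to this user."""
    return service in PERMISSIONS.get(user, set())


def can_access(user, token, service):
    """A request succeeds only if both checks pass."""
    return authenticate(user, token) and authorize(user, service)


# Checks a security test might make
assert can_access("alice", "token-a", "reports")
assert not can_access("bob", "token-b", "reports")       # authenticated, not authorized
assert not can_access("alice", "wrong-token", "orders")  # not authenticated
assert not can_access("mallory", "token-a", "orders")    # unknown user
```

The negative cases matter most in a security test: each one exercises a different way a request should be refused.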

Maintainability Testing

We encourage development teams to develop standards and guidelines that they follow for application code, the test frameworks, and the tests themselves. Teams that develop their own standards, rather than having them set by some other independent team, will be more likely to follow them because they make sense to them. The kinds of standards we mean include naming conventions for method names or test names. All guidelines should be simple to follow and make maintenance easier.

Standards for developing the GUI also make the application more testable and maintainable, because testers know what to expect and don’t need to wonder whether a behavior is right or wrong. It also adds to testability if you are automating tests from the GUI. Simple standards such as, “Use names for all GUI objects rather than defaulting to the computer assigned identifier” or “You cannot have two fields with the same name on a page” help the team achieve a level where the code is maintainable, as are the automated tests that provide coverage for it.
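Simple GUI standards like the two quoted above lend themselves to automated checks. The sketch below, which uses only Python’s standard `html.parser`, reports form fields that share a name and counts fields that have no name at all. The class and function names and the sample page are hypothetical.

```python
from collections import Counter
from html.parser import HTMLParser


class FieldNameCollector(HTMLParser):
    """Collect the name attribute of every form field on a page."""

    FIELD_TAGS = {"input", "select", "textarea"}

    def __init__(self):
        super().__init__()
        self.names = []
        self.unnamed = 0

    def handle_starttag(self, tag, attrs):
        if tag not in self.FIELD_TAGS:
            return
        name = dict(attrs).get("name")
        if name:
            self.names.append(name)
        else:
            self.unnamed += 1


def check_field_names(html):
    """Return (sorted duplicate names, count of fields with no name)."""
    collector = FieldNameCollector()
    collector.feed(html)
    duplicates = sorted(n for n, c in Counter(collector.names).items() if c > 1)
    return duplicates, collector.unnamed


# Hypothetical page with one duplicated name and one unnamed field
page = """
<form>
  <input name="email">
  <input name="email">
  <input type="text">
  <select name="country"></select>
</form>
"""
print(check_field_names(page))  # (['email'], 1)
```

A real check would need exemptions the sketch ignores: radio buttons in one group, for example, share a name by design.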

Maintainability is an important factor for automated tests as well. Database maintainability is also important. The database design needs to be flexible and usable. Every iteration might bring tasks to add or remove tables, columns, constraints, or triggers, or to do data conversion. These tasks become a bottleneck if the database design is poor or the database is cluttered with invalid data.

Interoperability

Interoperability refers to the capability of diverse systems and organizations to work together and share information. Interoperability testing looks at end-to-end functionality between two or more communicating systems. These tests are done in the context of the user, whether a human or a software application, and look at functional behavior.

Compatibility

The type of project you’re working on dictates how much compatibility testing is required. If you have a web application and your customers are worldwide, you will need to think about all types of browsers and operating systems. If you are delivering a custom enterprise application, you can probably reduce the amount of compatibility testing, because you might be able to dictate which versions are supported. As each new screen is developed as part of a user interface story, it is a good idea to check its operability in all supported browsers. A simple task can be added to the story to test on all browsers.
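One way to keep the “test on all browsers” task explicit is to generate the list of combinations from the supported browsers and operating systems. The sketch below is illustrative only: the browser and OS lists and the excluded pairs are assumptions, and a real support matrix would come from the project’s requirements.

```python
from itertools import product

# Hypothetical support lists; a real project would take these from its requirements.
BROWSERS = ["Chrome", "Firefox", "Safari", "Edge"]
OPERATING_SYSTEMS = ["Windows", "macOS", "Linux"]

# Pairs that are not scheduled for testing
# (assumption for this example: Safari is only tested on macOS).
UNSUPPORTED = {("Safari", "Windows"), ("Safari", "Linux")}


def compatibility_matrix(browsers, systems, unsupported=frozenset()):
    """Return every browser/OS pair that needs a compatibility check."""
    return [pair for pair in product(browsers, systems) if pair not in unsupported]


matrix = compatibility_matrix(BROWSERS, OPERATING_SYSTEMS, UNSUPPORTED)
for browser, system in matrix:
    print(f"check new screen on {browser} / {system}")
```

When the supported versions can be dictated, as with a custom enterprise application, shrinking the two lists shrinks the matrix accordingly.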

Reliability

Software reliability is the ability of a system to perform and maintain its functions in routine as well as unexpected circumstances. The system must also perform and maintain its functions with consistency and repeatability. Reliability analysis answers the question, “How long will it run before it breaks?” Some statistics used to measure reliability are:

Mean time to failure: The average or mean time between initial operation and the first occurrence of a failure or malfunction. In other words, how long can the system run before it fails the first time?

Mean time between failures: A statistical measure of reliability, calculated to indicate the anticipated average time between failures. The longer, the better. We schedule weeks of reliability testing that run simulations matching a regular day’s work.
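Both statistics reduce to simple arithmetic once the test runs have been logged. The sketch below assumes one common way of computing them: the time to first failure averaged across runs, and total operating time divided by the number of failures observed. The function names and sample figures are hypothetical.

```python
from statistics import mean


def mean_time_to_failure(hours_to_first_failure):
    """Average hours from start of operation to the first failure, across runs."""
    return mean(hours_to_first_failure)


def mean_time_between_failures(total_operating_hours, failure_count):
    """Total operating hours divided by the number of failures observed."""
    if failure_count == 0:
        raise ValueError("no failures observed; MTBF is undefined for this sample")
    return total_operating_hours / failure_count


# Hypothetical results from three simulated runs
first_failures = [120.0, 95.0, 145.0]
print(mean_time_to_failure(first_failures))   # 120.0
print(mean_time_between_failures(1000.0, 8))  # 125.0
```

A run with no failures says only that the true figure is at least as long as the run, which is why the second function refuses to return a number in that case.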

Novature Tech has a Competency Center for Performance Testing. We carry out different types of Performance Testing based on the Client requirements.

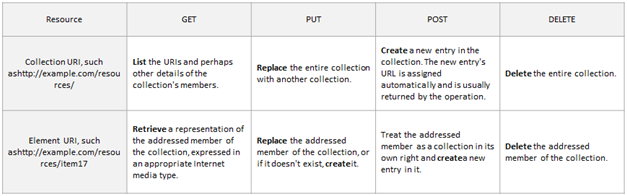

The HTTP methods typically used to implement a RESTful web service are:

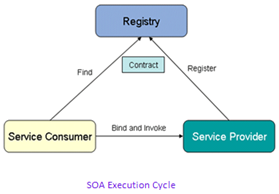

Service-component-level testing

Service-level testing

Integration-level testing

Tested components are grouped logically as a release candidate for integration-level testing. At this level the dependencies between services are resolved, so there is no need to use simulators or stubs. The environment is the production environment with UAT credentials.

Process/Orchestration-level testing

System-level testing

System-level testing will form the majority, though not all, of the User Acceptance Test phase. This test phase verifies that the SOA technical solution has delivered the defined business requirements and has met the defined business acceptance criteria. It re-uses the test suites developed in the previous phases with appropriate business data.

Security Testing

Penetration security testing will be part of the service-level testing phase. At the end of service-level testing, QA conducts security testing with the help of WC Penetration security testing specialist.

© 2024 Novature Tech Pvt Ltd. All Rights Reserved.