Novature Tech has a well-established Center of Excellence (CoE) for Test Automation. Its focus is on consolidating knowledge and best practices and on investing in innovation. It has a well-proven test automation process, methodologies and frameworks.

The Test Automation CoE has defined a Test Automation Methodology that provides an overview of the processes and procedures to be followed for every test automation project.

The automation methodology describes a process for approaching test design and execution. It serves as a guideline for the effective and efficient implementation of all test automation projects. This structured approach also helps steer the testing team away from the common test program mistakes mentioned below:

This process supports the detailed, inter-related activities required to select an automated testing tool and to introduce and use it.

The automation testing process comprises five distinct phases:

Objective: The primary objective of the first phase, which analyzes the automation requirements, is to assess the nature of the application and the client's requirements and expectations, and to establish the scope of automation.

Entry Criteria: For the benefits of automated testing to be fully realized, the following preconditions need to be fulfilled before the automation process starts:

Activities: This phase typically involves analyzing the automation test requirements from the application's manual test cases. This includes identifying test cases for automation, prioritizing them based on need and defining a complexity factor for each test case.
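The identification and prioritization steps above can be sketched as a simple filter and sort. This is only an illustration. The `ManualTestCase` fields, the step-count thresholds used as a complexity factor and the test case IDs are all hypothetical, and real selection criteria come from the project's feasibility study. The one criterion taken from this methodology is that tests needing constant human intervention are excluded.

```python
from dataclasses import dataclass


@dataclass
class ManualTestCase:
    """Hypothetical summary of one manual test case; field names are illustrative."""
    case_id: str
    needs_human_intervention: bool  # e.g. visual judgement or physical devices
    runs_per_release: int           # how often the case is executed
    is_regression: bool             # covers pre-existing functionality
    feature_is_stable: bool         # screens and flow are not changing every build
    steps: int                      # number of steps, used as a complexity proxy


def is_candidate(tc: ManualTestCase) -> bool:
    """Exclude tests that need constant human intervention or target unstable areas."""
    return not tc.needs_human_intervention and tc.feature_is_stable


def complexity(tc: ManualTestCase) -> str:
    """Illustrative complexity factor; the step-count thresholds are assumptions."""
    if tc.steps <= 10:
        return "simple"
    if tc.steps <= 25:
        return "medium"
    return "complex"


def prioritize(cases: list[ManualTestCase]) -> list[tuple[str, str]]:
    """Regression tests first, then the most frequently executed ones."""
    candidates = [tc for tc in cases if is_candidate(tc)]
    candidates.sort(key=lambda tc: (not tc.is_regression, -tc.runs_per_release))
    return [(tc.case_id, complexity(tc)) for tc in candidates]


cases = [
    ManualTestCase("TC_001", False, 5, True, True, 8),
    ManualTestCase("TC_002", True, 5, True, True, 4),     # needs a human: excluded
    ManualTestCase("TC_003", False, 12, False, True, 30),
    ManualTestCase("TC_004", False, 3, True, False, 12),  # unstable feature: excluded
]
print(prioritize(cases))  # [('TC_001', 'simple'), ('TC_003', 'complex')]
```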

Once clearly defined and documented, the automation requirements must be understood by the entire project team. This helps identify the resource requirements and prepare a test plan based on the application's complexity. During this phase, the test team identifies:

Ideal Candidates for automation

A feasibility study is required to identify the test cases which can be automated. Tests that require constant human intervention are usually not worth the investment to automate. The following are examples of criteria that can be used to identify tests that are prime candidates for automation:

Creation of Automation Traceability Matrix: The main objective of the automation traceability document is to make it easier to modify the automation test scripts in all future releases. Hence, the exact traceability between the manual test cases, the functional components and the corresponding functions in the automation test scripts is created and captured in the Automation Traceability Matrix. Creation of the matrix should start once the requirements are established and frozen.
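At its core, a traceability matrix is a set of links between manual test cases, functional components and script functions. The sketch below uses hypothetical IDs and function names. It shows how those links can be queried to find the automation functions affected when a component changes in a later release, and the manual test cases each function covers.

```python
from collections import defaultdict

# Each row links a manual test case, a functional component and the automation
# function that implements it. All IDs and names are hypothetical.
MATRIX = [
    ("TC_001", "Login", "login_with_valid_user"),
    ("TC_002", "Login", "login_with_invalid_password"),
    ("TC_003", "Checkout", "place_order"),
    ("TC_003", "Payment", "pay_by_card"),
]


def functions_for_component(component: str) -> set[str]:
    """Automation functions to review when a functional component changes."""
    return {func for _, comp, func in MATRIX if comp == component}


def cases_by_function() -> dict[str, list[str]]:
    """Reverse view: the manual test cases covered by each automation function."""
    view = defaultdict(list)
    for case_id, _, func in MATRIX:
        view[func].append(case_id)
    return dict(view)


print(sorted(functions_for_component("Login")))
print(cases_by_function()["place_order"])  # ['TC_003']
```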

Exit Criteria: The exit criteria for this phase are successful identification of test cases for automation and creation of automation traceability matrix.

Objective: The objective of the test planning phase is to help the test team track the progress of the test process, as well as overall project activities, throughout the project lifecycle.

Entry Criteria: The test cases identified for automation should be baselined.

Activities: The test planning phase starts with tool selection, followed by the formulation of the test automation approach and finally the creation of the test plan document, which is the outcome of this phase. This document is shared with the relevant stakeholders for review and approval. The following activities are performed in this phase:

Tool Selection: With emerging trends and technologies, a large number of automation tools are available in the market, so identifying the tool that best supports the application under test is important. Tool evaluation is essential for selecting an automation tool that meets the project's expectations. It requires prior experience and a defined process to reduce the complexity of tool selection. The general concerns include:
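A weighted scoring matrix is one common way to record a tool evaluation. The sketch below assumes four criteria, their weights and 1-5 scores for two unnamed tools. These are illustrative placeholders, not recommendations; a real evaluation would use the project's own criteria and measured scores.

```python
# Hypothetical criteria and weights (weights sum to 1.0).
WEIGHTS = {
    "supports_aut_technology": 0.4,
    "licence_cost": 0.2,
    "team_skills": 0.2,
    "reporting_and_integration": 0.2,
}

# Scores on a 1-5 scale from the evaluation; tool names and numbers are placeholders.
SCORES = {
    "Tool A": {"supports_aut_technology": 5, "licence_cost": 2,
               "team_skills": 4, "reporting_and_integration": 4},
    "Tool B": {"supports_aut_technology": 4, "licence_cost": 5,
               "team_skills": 3, "reporting_and_integration": 3},
}


def weighted_score(scores: dict[str, int]) -> float:
    """Sum of weight x score over all criteria, rounded for display."""
    return round(sum(weight * scores[c] for c, weight in WEIGHTS.items()), 2)


def rank_tools(all_scores: dict[str, dict[str, int]]) -> list[tuple[float, str]]:
    """Highest weighted score first."""
    return sorted(((weighted_score(s), tool) for tool, s in all_scores.items()), reverse=True)


print(rank_tools(SCORES))  # [(4.0, 'Tool A'), (3.8, 'Tool B')]
```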

Proof of Concept: The objective of the proof of concept phase is to demonstrate and validate the test automation process and approach to be followed for the project. The automation experts formulate an approach that best suits the project's requirements and characteristics. The scope of the proof of concept is to automate and deliver a couple of test cases on a sample basis. The proof of concept establishes:

The proof of concept gives all stakeholders early insight into the proposed test automation approach for the project, so that any necessary corrective actions to the test process can be taken early in the project lifecycle.

Preparing Test Plan: A test plan helps track the progress of project activities. It ensures that everyone involved in the project is in sync with regard to the project's scope, responsibilities, schedules and deliverables. The progress of the test process is tracked against the test plan, which provides early insight for corrective action in case of deviations.

The test plan defines:

The test plan appendices include the test procedures and their estimated number, a description of the naming convention and the test procedure format.

Exit Criteria: This phase concludes with the formulation of the automation approach as well as defining all activities related to project execution in a test plan document.

Objective: The objective of the test design phase is to define the number of tests to be performed, the final approach (paths, functions) and the test conditions. Test design standards need to be defined and followed.

Entry Criteria: The test plan is the primary input to the test design phase.

Activities: The test design phase addresses the following tasks:

Finalize Automation Approach: During this phase, the automation approach defined during the proof of concept stage is fine-tuned to address:

Develop Scripting Standards: Automation scripting has become a development exercise in its own right. Scripting standards are necessary for each project so that the scripts and their documentation are read and understood in the same way by everyone involved. The following points should be considered when building up scripting standards:

Automation Tool Standards: The standards set for the automation tool must also be considered when framing the scripting standards for script development.

Naming conventions: Standardized naming conventions for functions, variables, data tables, scripts and test cases should be defined and strictly followed.

Parameterization: It is good practice to parameterize every instance of user input. This makes the test data flexible and allows the script to be used for a variety of input combinations.

Exception handling: Exception handling guidelines are an important section of the standards. Several issues can affect the smooth execution of a script, such as a test data failure or an unexpected application pop-up. These guidelines help script developers decide what action to take in such events.

Error Logging: Standardized error logging is a must. The execution log is the prime outcome of an automated test run, and a formalized log makes it easy to analyze the test results after the test suite has executed.

Documentation: Documentation standards, such as rules for comments and indentation, help script developers create uniform scripts. They also make the code easier for new script developers to read and understand.
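To make the standards above concrete, here is a small, hypothetical Python script that follows them. It uses conventional names (`TC_<Module>_<NNN>` test IDs, a `DT_Login` data table, snake_case functions) and reads every input from a data table. It also applies an exception-handling rule for unexpected pop-ups, writes a standardized log line and documents each function with a docstring. The conventions, the log format and the `FakeLoginPage` stand-in are assumptions made for this example, not the CoE's actual standards.

```python
import csv
import io
import logging

# Standardized log line (assumed format): level | test case | message
LOG_FORMAT = "%(levelname)s | %(test_case)s | %(message)s"

# Data table "DT_Login" (hypothetical DT_<Module> convention). Every user input
# is a parameter; nothing is hard-coded in the script.
DT_LOGIN = """test_case,username,password,expected,popup
TC_Login_001,alice,secret,home,no
TC_Login_002,alice,wrong,error,no
TC_Login_003,bob,secret,home,yes
"""


class UnexpectedPopup(Exception):
    """Raised when an application pop-up interrupts the flow."""


class FakeLoginPage:
    """Stand-in for the application under test; purely illustrative."""

    def __init__(self, popup_pending: bool) -> None:
        self.popup_pending = popup_pending

    def dismiss_popup(self) -> None:
        self.popup_pending = False

    def sign_in(self, username: str, password: str) -> str:
        if self.popup_pending:
            raise UnexpectedPopup("session-timeout dialog")
        return "home" if password == "secret" else "error"


def make_logger(stream) -> logging.Logger:
    """Create the suite logger with the standard format."""
    logger = logging.getLogger("automation_suite")
    logger.setLevel(logging.INFO)
    handler = logging.StreamHandler(stream)
    handler.setFormatter(logging.Formatter(LOG_FORMAT))
    logger.handlers = [handler]  # avoid duplicate handlers on repeated runs
    logger.propagate = False
    return logger


def verify_login(row: dict, logger: logging.Logger) -> bool:
    """Sign in with one data row and compare the landing page with `expected`.

    Exception-handling rule (assumed): dismiss an unexpected pop-up once and
    retry; any other error fails this test case without stopping the suite.
    """
    ctx = {"test_case": row["test_case"]}
    page = FakeLoginPage(popup_pending=row["popup"] == "yes")
    try:
        try:
            actual = page.sign_in(row["username"], row["password"])
        except UnexpectedPopup as popup:
            logger.warning("dismissed pop-up: %s", popup, extra=ctx)
            page.dismiss_popup()
            actual = page.sign_in(row["username"], row["password"])
    except Exception as exc:
        logger.error("step failed: %s", exc, extra=ctx)
        return False
    passed = actual == row["expected"]
    logger.log(logging.INFO if passed else logging.ERROR,
               "expected %s, got %s", row["expected"], actual, extra=ctx)
    return passed


log_stream = io.StringIO()
logger = make_logger(log_stream)
results = [verify_login(row, logger) for row in csv.DictReader(io.StringIO(DT_LOGIN))]
print(results)  # [True, True, True]
print(log_stream.getvalue())
```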

Exit Criteria: The phase concludes with the finalization of the automation approach and the establishment of scripting standards.

Objective: The objective of the script development phase is to create test scripts based on the identified business flows and manual test cases.

Entry Criteria: The manual test cases, test data and scripting standards form the input for this phase.

Activities: In this phase, reusable functions and application-specific functions are created. These functions, together with the test data and the scripting standards in place, facilitate test script development. All of these are encapsulated in a test suite, which can be invoked by executing a single script file, usually called the driver script. The main activities of this phase are:

Converting Requirements into Automated Scripts: Requirements in the form of baselined manual test cases that are ideal candidates for automation are converted into automated scripts.

Creating Reusable / Module-Specific Functions: Reusability eliminates redundancy, which is why the functions are organized for reuse. There are two kinds of reusable functions:

Common functions: All generic functions and wrappers are designed so that they can be reused not only in this project but also if testing of other modules of the application is needed at a later stage. For example, test log reporting and error handling functions do not change with the application and can be reused.

Business functions: Business functions are designed to be reusable across the product-specific main scripts. Unlike common functions, they are tied to the application; for example, a login function is very specific to an application.
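The split between common and business functions might look like this in a minimal Python sketch. `report` knows nothing about the application, while `login` encodes one application's flow and is reused by two scripts. `FakeApp` and its `enter`/`press` methods are hypothetical stand-ins for a real UI driver.

```python
# --- Common function: application-agnostic, reusable across projects ---
def report(results: list, step: str, passed: bool) -> None:
    """Generic result logger; knows nothing about the application under test."""
    results.append((step, "PASS" if passed else "FAIL"))


# --- Business function: specific to one application, reused by its scripts ---
class FakeApp:
    """Hypothetical stand-in for the application's UI driver."""

    def __init__(self) -> None:
        self.fields = {}
        self.page = "login"

    def enter(self, field: str, value: str) -> None:
        self.fields[field] = value

    def press(self, button: str) -> None:
        if button == "sign_in" and self.fields.get("password") == "secret":
            self.page = "home"


def login(app: FakeApp, user: str, password: str, results: list) -> bool:
    """This application's login flow, built on top of the common `report` function."""
    app.enter("username", user)
    app.enter("password", password)
    app.press("sign_in")
    ok = app.page == "home"
    report(results, f"login as {user}", ok)
    return ok


# Two different main scripts can reuse the same business function.
results = []
login(FakeApp(), "alice", "secret", results)
login(FakeApp(), "bob", "wrong", results)
print(results)  # [('login as alice', 'PASS'), ('login as bob', 'FAIL')]
```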

Test Script Generation: A test script consists of data input steps, navigation flow, verification checks and reusable components, arranged in the sequence required by the test case. The test script should follow the format and documentation standards defined in the test plan. Once ready, a test script is executed to ensure that it works seamlessly; if any errors occur, it is debugged until it executes without errors. A test suite consists of a set of such test scripts, which the driver script calls in a defined sequence. The driver script includes a set of instructions to initialize the environment parameters and settings required for test suite execution.
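A driver script of the kind described above can be sketched as follows. The environment variable names, the three test scripts and their placeholder checks are hypothetical. The point is the structure: initialize the environment once, call the scripts in a defined sequence, and record a result for each without stopping at the first failure.

```python
import os


def init_environment() -> dict:
    """Initialize environment parameters for the run.
    The variable names and defaults are assumptions for this sketch."""
    return {
        "base_url": os.environ.get("AUT_BASE_URL", "http://localhost:8080"),
        "browser": os.environ.get("AUT_BROWSER", "chrome"),
    }


def verify(condition: bool, message: str) -> None:
    """Verification check: raise AssertionError with a readable message on failure."""
    if not condition:
        raise AssertionError(message)


# Hypothetical test scripts. A real script would hold the data input steps,
# navigation flow and verification checks from its manual test case.
def ts_login(env: dict) -> None:
    landing_page = "home"  # placeholder for navigating and signing in
    verify(landing_page == "home", "home page not displayed")


def ts_checkout(env: dict) -> None:
    confirmation = None  # placeholder: the confirmation never appeared
    verify(confirmation is not None, "order confirmation not displayed")


def ts_logout(env: dict) -> None:
    verify(env["base_url"].startswith("http"), "base URL is not an HTTP address")


SUITE = [ts_login, ts_checkout, ts_logout]  # the defined execution sequence


def driver(suite) -> dict:
    """Driver script: initialize the environment once, then run each script in order."""
    env = init_environment()
    outcome = {}
    for script in suite:
        try:
            script(env)
            outcome[script.__name__] = "PASS"
        except AssertionError as exc:
            outcome[script.__name__] = f"FAIL: {exc}"
    return outcome


print(driver(SUITE))
```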

Dry Runs: After scripting, the scripts are executed multiple times in the test environment, both individually and in batch mode. The objective of the dry run is to simulate the actual execution during releases, to ensure that the scripts work seamlessly and that test results are reported accurately. If the scripts are designed to run on multiple environments with different operating system combinations, trial runs are also conducted on all of those environments to ensure the scripts report test results correctly. The exit criterion for the dry run is seamless execution of the automation scripts together with correct reporting of test results. Dry runs also help identify inconsistencies in the application's response time under different conditions, which reduces script maintenance during actual execution for releases.
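Dry runs can also be used to spot inconsistent response times. One simple approach, assumed here rather than prescribed by the methodology, is to time each step over several runs. Steps whose coefficient of variation (standard deviation divided by mean) exceeds a threshold are then flagged. The timings below are illustrative, not measurements.

```python
import statistics
import time
from typing import Callable


def dry_run(step: Callable[[], None], runs: int = 5) -> list[float]:
    """Execute one step several times and record each duration in seconds."""
    timings = []
    for _ in range(runs):
        start = time.perf_counter()
        step()
        timings.append(time.perf_counter() - start)
    return timings


def is_inconsistent(timings: list[float], max_cv: float = 0.5) -> bool:
    """Flag a step whose coefficient of variation (stdev / mean) exceeds max_cv.
    The 0.5 threshold is an assumption to be tuned per project."""
    mean = statistics.mean(timings)
    return mean > 0 and statistics.stdev(timings) / mean > max_cv


# Illustrative timings (seconds) for two steps over five dry runs, not real data.
stable_step = [1.1, 1.0, 1.2, 1.1, 1.0]
erratic_step = [0.9, 4.8, 1.0, 6.2, 1.1]
print(is_inconsistent(stable_step), is_inconsistent(erratic_step))  # False True
```

A flagged step usually suggests that the script should synchronize explicitly with the application rather than rely on a fixed delay.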

Exit Criteria: This phase concludes with successful creation of test suite and execution of the test suite to ensure the scripts are working seamlessly and the test results are being reported accurately.

Objective: The objective of the test execution phase is to test the application using the automated test suite and to report the test results.

Entry Criteria: The application build is available with all functionalities to be tested, so that the automation test suite can execute seamlessly.

Activities: Test automation yields its benefits in the test execution phase: the suite runs unattended in the test environment, freeing testers for other activities instead of manual test execution. Test automation also benefits business users who run repetitive tests, although they need to be trained to execute the test suite.

The following activities are performed in this phase:

Execution: The advantage of automation is that, once the test suite is automated, it can be invoked at any time and executed repeatedly in the test environment. Even for small functional changes in the application under test, the test suite can be executed overnight. After execution, the results are analyzed for failures. Defects found in the test results are reported to project management and tracked to closure.

Reporting: During suite execution, all navigation steps, data inputs and verification checks are logged in the test results. The test results should be detailed enough for easy interpretation and analysis. Failed steps are analyzed for defects, which are reported to project management. The test results can be output in text, Excel, PDF or HTML format, as the project requires.
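As an illustration of multi-format reporting, the sketch below renders the same hypothetical step results in three formats using only the Python standard library: plain text, CSV (which Excel opens directly) and HTML. Native Excel or PDF output would need third-party libraries and is not shown.

```python
import csv
import html
import io

# Hypothetical step results captured during a suite run.
RESULTS = [
    {"test": "TC_Login_001", "step": "enter credentials", "status": "PASS", "detail": ""},
    {"test": "TC_Login_001", "step": "verify home page", "status": "FAIL", "detail": "banner <missing>"},
]
COLUMNS = ["test", "step", "status", "detail"]


def to_text(rows) -> str:
    """Fixed-width plain-text report."""
    return "\n".join(f"{r['test']:<14}{r['step']:<20}{r['status']:<6}{r['detail']}" for r in rows)


def to_csv(rows) -> str:
    """CSV report; opens directly in spreadsheet tools such as Excel."""
    out = io.StringIO()
    writer = csv.DictWriter(out, fieldnames=COLUMNS)
    writer.writeheader()
    writer.writerows(rows)
    return out.getvalue()


def to_html(rows) -> str:
    """HTML table report; cell values are escaped so markup in messages is safe."""
    body = "".join(
        "<tr>" + "".join(f"<td>{html.escape(r[c])}</td>" for c in COLUMNS) + "</tr>"
        for r in rows
    )
    header = "".join(f"<th>{c.title()}</th>" for c in COLUMNS)
    return f"<table><tr>{header}</tr>{body}</table>"


failed = [r for r in RESULTS if r["status"] == "FAIL"]
print(to_text(failed))
```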

Automation Metrics Collection: Metrics are collected to review the overall automation process in terms of effectiveness and efficiency. Metrics are also effective in measuring the cost benefits of automation over manual testing. The following metrics can be collected in the appropriate phases:

Exit Criteria: This phase concludes with the generation of the appropriate test results and reports and the collection of test metrics at predefined points.

Important factors to consider before automating are:

Scope – It is not practical to try to automate everything, nor is there generally the time to do so. Choose the functions and areas of the application to be automated very carefully.

Preparation Time Frame – The preparation time for automated test scripts has to be taken into account. In general, preparing automated scripts can take two to three times longer than preparing for manual testing. In reality, the tool is likely to increase the testing scope at first, so it is very important to manage expectations. An automated testing tool does not replace manual testing, nor does it replace the test engineer. Initially the test effort will increase, but when automation is done correctly it will decrease in subsequent releases.

Return on Investment – Because the preparation time for test automation is so long, it is often said that the benefit of test automation only begins after the tests have been run approximately three times.
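The break-even point can be worked out with a simple model, assumed here for illustration. Suppose automating costs P hours up front, a manual run costs M hours and an automated run costs A hours (launching plus result analysis). Automation then pays back after the smallest n with P + nA ≤ nM, that is n ≥ P / (M − A). The figures in the example are hypothetical.

```python
import math


def break_even_runs(prep_hours: float, manual_run_hours: float, auto_run_hours: float) -> int:
    """Smallest number of runs n for which prep + n * auto <= n * manual.

    Simplified model: script maintenance is ignored, which in practice
    pushes the break-even point later.
    """
    saving_per_run = manual_run_hours - auto_run_hours
    if saving_per_run <= 0:
        raise ValueError("automation never pays back if a run saves no effort")
    return math.ceil(prep_hours / saving_per_run)


# Illustrative figures only: 25 h to automate, 10 h per manual cycle,
# 1 h per automated cycle for launching and analyzing results.
print(break_even_runs(25, 10, 1))  # 3
```

With these figures, 25 / 9 ≈ 2.8, so the automated suite breaks even on the third run. That is consistent with the rule of thumb above, but the result depends entirely on the inputs.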

When is the benefit to be gained? Choose your objectives wisely, and think seriously about when and where the benefit is to be gained. If your application changes significantly on a regular basis, forget about test automation: you will spend so much time updating your scripts that you will not reap many benefits. However, if only isolated sections of the application are changing, if the changes are minor, or if a specific section is not changing, you may still be able to use automated tests successfully. Bear in mind that you may only be able to do a complete automated test run when your application is almost ready for release, i.e. nearly fully tested. If your application is very buggy, you are unlikely to be able to run a complete suite of automated tests because of the failing functions encountered.

The Degree of Change – The best use of test automation is for regression testing, whereby you use automated tests to ensure that pre-existing functions (e.g. functions from version 1.0 – i.e. not new functions in this release) are unaffected by any changes introduced in version 1.1.

Test Integrity – how do you know (measure) whether a test passed or failed? Just because the tool returns a ‘pass’ does not necessarily mean that the test itself passed. For example, just because no error message appears does not mean that the next step in the script successfully completed. This needs to be taken into account when specifying test script fail/pass criteria.
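A toy example shows the difference between "no error appeared" and "the step succeeded". `FakeOrderApp` is a hypothetical application with a deliberate bug that silently drops large orders. The weak check passes because no error message is shown. The strong check verifies the expected end state and catches the defect.

```python
class FakeOrderApp:
    """Hypothetical application with a deliberate bug: orders above 10 units
    are silently dropped and no error message is shown."""

    def __init__(self) -> None:
        self.orders = {}
        self.error_message = None  # would hold the text of any on-screen error

    def submit_order(self, order_id: str, qty: int) -> None:
        if qty <= 10:
            self.orders[order_id] = qty


def weak_check(app: FakeOrderApp, order_id: str, qty: int) -> bool:
    """'Pass' simply because no error message appeared."""
    app.submit_order(order_id, qty)
    return app.error_message is None


def strong_check(app: FakeOrderApp, order_id: str, qty: int) -> bool:
    """'Pass' only if the expected end state is actually present."""
    app.submit_order(order_id, qty)
    return app.orders.get(order_id) == qty


print(weak_check(FakeOrderApp(), "A1", 20), strong_check(FakeOrderApp(), "A1", 20))  # True False
```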

Test Independence – Test independence must be built in so that a failure in the first test case does not cause a domino effect that either prevents the rest of the test scripts in the suite from running or causes them to fail. In practice, however, this is very difficult to achieve.
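One common way to build in independence is to have every test create its own starting state in `setUp`, rather than relying on what the previous test left behind. The sketch uses Python's `unittest` module with a hypothetical `FakeCart`. The second test empties its cart, yet the third still starts from a fresh one; with state shared across tests, the third test would fail.

```python
import io
import unittest


class FakeCart:
    """Hypothetical application state used by the tests."""

    def __init__(self) -> None:
        self.items = []


class CartTests(unittest.TestCase):
    # unittest runs test methods in alphabetical order, hence the numeric prefixes.

    def setUp(self) -> None:
        # Every test builds its own starting state instead of inheriting
        # whatever the previous test left behind.
        self.cart = FakeCart()
        self.cart.items.append("book")

    def test_1_add_item(self) -> None:
        self.cart.items.append("pen")
        self.assertEqual(self.cart.items, ["book", "pen"])

    def test_2_remove_item(self) -> None:
        # Leaves this test's cart empty.
        self.cart.items.remove("book")
        self.assertEqual(self.cart.items, [])

    def test_3_checkout(self) -> None:
        # Unaffected by the previous test: setUp gave it a fresh cart.
        self.assertEqual(self.cart.items, ["book"])


def run_suite() -> unittest.TestResult:
    """Run the test case quietly and return the result object."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(CartTests)
    return unittest.TextTestRunner(stream=io.StringIO()).run(suite)


result = run_suite()
print(result.testsRun, len(result.failures))  # 3 0
```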

Debugging or “testing” the test scripts themselves – time must be allowed for this, and for proving the integrity of the tests.

Record & Playback – DO NOT RELY on record and playback as the SOLE means of generating a script. The idea is appealing: you execute the test manually while the test tool sits in the background and records what you do, and it then generates a script that you can run to re-execute the test. It is a great idea that rarely works on its own, and a recorded script proves very little.

Maintenance of Scripts – Finally, automated test scripts carry a high maintenance overhead. They have to be kept continuously up to date; otherwise you may end up abandoning hundreds of hours of work because the application has changed too much to make modifying the test scripts worthwhile. For the same reason, the documentation of the test scripts must also be kept up to date.

The framework aims to simplify and speed up scripting through reusability. The goal is to provide a complete end-to-end automation solution for testing the application under test (AUT). In developing a framework for automating the tests, we keep the following points in mind:

The test framework should be application-independent: Although applications are relatively unique, the components that make them up generally are not. We therefore focus our automation framework on the common components that make up our unique applications, and reuse virtually everything we develop for every application that goes through the automated test process.

The test framework must be easy to expand, maintain and perpetuate: The framework should be highly modular and maintainable. Each module is independent of the others and is not affected by changes in other modules, so each module can be expanded without affecting any other part of the system. The framework aims to be simple and long-lived. It isolates the application under test from the test scripts by providing a set of functions in a shared function library. Test script writers treat these functions as if they were basic commands of the test tool’s programming language, so they can write scripts independently of the software’s user interface. Hence the key points of the framework are:

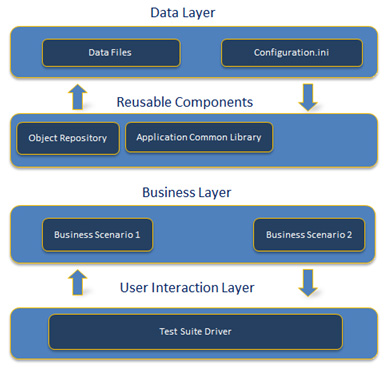

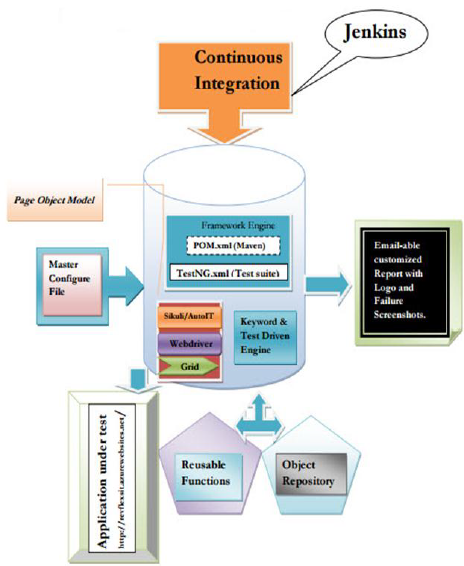
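The shared function library described above can be sketched with an object map that isolates scripts from the user interface. Scripts refer to logical names, and only the map knows the current locators. The map entries, `FakeDriver` and the function names are hypothetical; a real framework would delegate to its test tool instead of recording actions.

```python
# Logical names used by scripts -> (locator strategy, locator) for the current build.
# Entries are hypothetical; only this map changes when the UI changes.
OBJECT_MAP = {
    "username_field": ("id", "user"),
    "password_field": ("id", "pass"),
    "sign_in_button": ("css", "button.login"),
}


class FakeDriver:
    """Hypothetical UI driver that records actions instead of driving a browser."""

    def __init__(self) -> None:
        self.actions = []

    def act(self, how: str, locator: str, action: str, value=None) -> None:
        self.actions.append((how, locator, action, value))


# Shared function library: scripts use these like built-in tool commands.
def enter_text(driver: FakeDriver, name: str, value: str) -> None:
    how, locator = OBJECT_MAP[name]
    driver.act(how, locator, "type", value)


def click(driver: FakeDriver, name: str) -> None:
    how, locator = OBJECT_MAP[name]
    driver.act(how, locator, "click")


def login_script(driver: FakeDriver) -> None:
    """A test script written only against logical names."""
    enter_text(driver, "username_field", "alice")
    enter_text(driver, "password_field", "secret")
    click(driver, "sign_in_button")


before = FakeDriver()
login_script(before)

OBJECT_MAP["sign_in_button"] = ("id", "login-btn")  # the UI changed in a new build
after = FakeDriver()
login_script(after)  # same script, no edits needed

print(before.actions[-1], after.actions[-1])
```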

Our automation framework combines the features of both data-driven and keyword-driven frameworks. The main components of this framework are:
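The general hybrid idea can be illustrated with a tiny interpreter. The keyword table describes the steps (keyword-driven), and each row of the data table supplies the values (data-driven). The keywords, the `{column}` placeholder syntax and `FakeApp` are assumptions for this sketch, not the actual components of the framework.

```python
# Keyword table: (keyword, target, value). "{column}" marks a value taken from
# the data table. Keywords, targets and columns are hypothetical.
KEYWORD_TABLE = [
    ("open", "login_page", None),
    ("enter", "username", "{username}"),
    ("enter", "password", "{password}"),
    ("press", "sign_in", None),
    ("verify", "message", "{expected}"),
]

# Data table: one row per test iteration.
DATA_TABLE = [
    {"username": "alice", "password": "secret", "expected": "Welcome"},
    {"username": "alice", "password": "oops", "expected": "Invalid login"},
]


class FakeApp:
    """Stand-in for the application under test; purely illustrative."""

    def __init__(self) -> None:
        self.fields = {}
        self.message = ""

    def open(self, page: str) -> None:
        self.fields.clear()

    def enter(self, target: str, value: str) -> None:
        self.fields[target] = value

    def press(self, target: str) -> None:
        ok = self.fields.get("password") == "secret"
        self.message = "Welcome" if ok else "Invalid login"


def run_keywords(table, data_row: dict) -> bool:
    """Interpret the keyword table once, filling values from one data row."""
    app = FakeApp()
    for keyword, target, value in table:
        if value is not None:
            value = value.format(**data_row)  # data-driven substitution
        if keyword == "open":
            app.open(target)
        elif keyword == "enter":
            app.enter(target, value)
        elif keyword == "press":
            app.press(target)
        elif keyword == "verify":
            if app.message != value:
                return False
        else:
            raise ValueError(f"unknown keyword: {keyword}")
    return True


print([run_keywords(KEYWORD_TABLE, row) for row in DATA_TABLE])  # [True, True]
```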

With our expertise in test automation and our focus on reducing its cost, we have developed ready-to-use test automation frameworks for testing web applications and mobile applications. We call our test automation framework for web applications RAFT-Web, which stands for Re-Usable Framework for Testing Web Applications. RAFT-Web is a ready-to-use framework; its architecture is shown below. The key features of the RAFT-Web framework are:

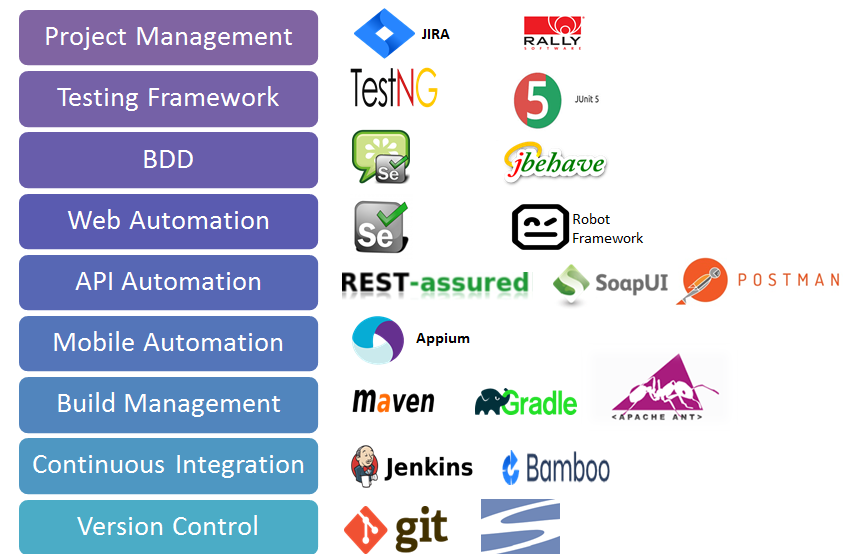

We have expertise in leading test automation tools such as QTP, RFT and Silk Test, but our recommendation and focus is on implementing open source tools like Selenium to achieve substantial cost savings with no compromise on the quality of test automation suites.

We help organizations migrate test automation suites from licensed tools to open source tools using our ready-to-use framework, RAFT-Web.

Our Marketplace Tool Expertise

© 2024 Novature Tech Pvt Ltd. All Rights Reserved.