Agile software development describes a set of principles under which requirements and solutions evolve through the collaborative effort of self-organizing, cross-functional teams. The key characteristics of the Agile Delivery model are shown below.

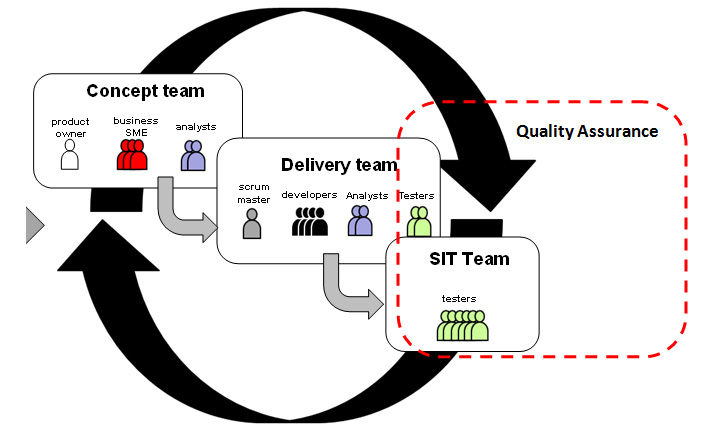

Agile Delivery teams are broadly classified into three major teams: the Concept team, the Delivery team, and the System Integration Testing (SIT) team. Other teams, such as the Architecture and PMO teams, support all three.

The Concept team is responsible for creating stories and delivering them to the Delivery team. It works closely with the Business team to prepare the stories.

The stories prepared by the Concept team are fed to the Delivery team, which consumes and implements them. The Delivery team includes a range of roles, such as Scrum Master, developers, analysts, and testers. Testers in the Delivery team validate the user stories that the developers build and hand over to them. They also work with the Business team to obtain acceptance by presenting appropriate evidence.

The validated user stories are then delivered to the SIT team. The SIT team validates that the components delivered by the different Delivery teams work together to perform the business functionality. It is also responsible for integration testing, which includes validating the connections to upstream and downstream systems. The scope of Delivery testing and SIT testing is described in the subsequent sections.

Separate teams with divided responsibilities (a producer-consumer model), operating as an incremental, iterative pipeline, follow Agile and Scrum principles. In a nutshell:

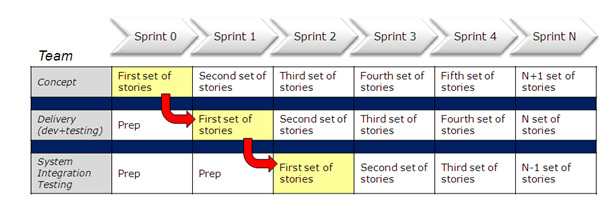

The sequence in which the different teams work on the stories is shown below:

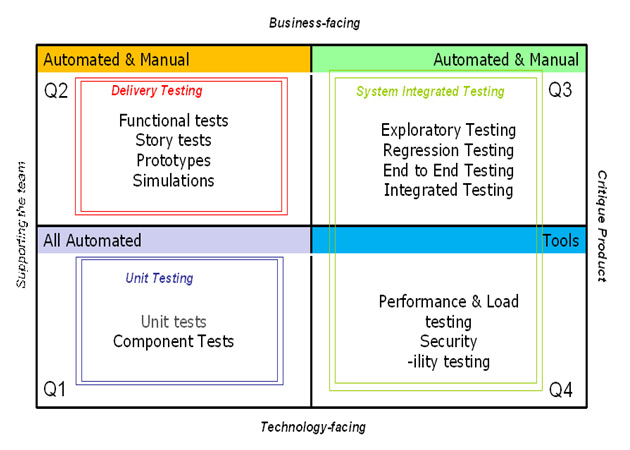

The Agile Testing Quadrants help testers and developers ensure that they have considered all the different types of tests needed to deliver value. The diagram shows how each of the four quadrants reflects a different reason for testing. On one axis, the matrix is divided into tests that support the team and tests that critique the product. The other axis is divided into business-facing and technology-facing tests.

Delivery Testing (Business Facing & Support the Team) – Quadrant 2 testing supports test-driven development (TDD). Delivery testers are part of the Delivery team; they work with the Business team to obtain acceptance and help validate and improve the quality of the delivery.

System Integration Testing (Critique the Product, Business Facing & Technology Facing) – An independent testing team validates that the different functional components work together to deliver the business solution. SIT also validates the connections to upstream and downstream systems. The SIT team also carries out performance testing, security testing, and other “-ility” testing.

The different types of testing carried out across the Testing Quadrants are described below.

The quadrants on the left include tests that support the team as it develops the product. This concept of testing to help the programmers is the biggest difference between testing on a traditional project and testing on an Agile project. The tests in Quadrants 1 and 2 act more as requirements specifications and design aids than as testing in the traditional sense. These quadrants represent test-driven development (TDD), which is a core Agile development practice. The testers who carry out the tests that support the team are part of the Delivery team. They help validate and improve the quality of the delivery.

The word “critique” isn’t intended in a negative sense. A critique can include both praise and suggestions for improvement. Appraising a software product involves both art and science. Software has to be reviewed in a constructive manner, with the goal of learning how it can be improved. The testing that critiques the product is carried out by a team that is not part of the Delivery team, so that it is not influenced by technical or other delivery constraints. This team reviews the product from outside, with a different testing focus.

The lower left quadrant (Q1) represents test-driven development, which is a core Agile development practice. This testing supports the Delivery team in improving the quality of the deliverable; its objective is to validate that each technical component or work product works as expected.

Unit Testing is a process in which the smallest testable parts of an application, called units, are individually and independently scrutinized for proper operation. Unit testing involves only those characteristics that are vital to the performance of the unit under test. This encourages developers to modify the source code without immediate concerns about how such changes might affect the functioning of other units or the program as a whole. Once all of the units in a program have been found to be working in the most efficient and error-free manner possible, larger components of the program can be evaluated by means of integration testing.

In unit testing, individual components of the configuration item are tested to verify that the new or modified aspects function properly. Code walkthroughs and inspections are additional techniques suggested for confirming the quality of the code. Unit testing is performed before the various components of the configuration item are combined for system integration testing.

A major purpose of Q1 tests is to support test-driven development (TDD), or test-driven design. The process of writing tests first helps programmers design their code well. These tests let the programmers confidently write code to deliver a story’s features without worrying about making unintended changes to the system. They can verify that their design and architecture decisions are appropriate. Unit and component tests are automated and written in the same programming language as the application. Programmer tests are normally part of an automated process that runs with every code check-in, giving the team instant, continual feedback about internal quality.
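As a minimal sketch of the kind of automated unit test described above, the example below uses Python's standard `unittest` module. The function `apply_discount` and its rules are hypothetical and exist only for illustration; in a test-first workflow the three test methods would be written before the function body.

```python
import unittest


def apply_discount(price: float, percent: float) -> float:
    """Hypothetical unit under test: reduce a price by a percentage."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


class ApplyDiscountTest(unittest.TestCase):
    def test_reduces_price(self):
        self.assertEqual(apply_discount(200.0, 25), 150.0)

    def test_zero_percent_leaves_price_unchanged(self):
        self.assertEqual(apply_discount(99.99, 0), 99.99)

    def test_rejects_out_of_range_percent(self):
        with self.assertRaises(ValueError):
            apply_discount(100.0, 120)


def run_unit_tests() -> unittest.TestResult:
    """Run the test case programmatically, as a check-in hook might."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(ApplyDiscountTest)
    return unittest.TextTestRunner(verbosity=0).run(suite)
```

Calling `run_unit_tests().wasSuccessful()` gives a single pass/fail signal that an automated build could act on at every check-in.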

The tests in Quadrant 2 also support the work of the development team, but at a higher level. The business-facing tests, also called customer-facing tests or customer tests, define external quality and the features that the customers want. The functional testing team is part of the Delivery team and helps it improve the quality of the delivery.

Functional testing validates that the delivered user stories meet their acceptance criteria. Functional tests exercise all positive and negative paths within a system, from the point of data entry to the point of exit, as well as reporting. Functional testing is executed by the Delivery testing team.
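The idea of covering positive and negative paths against acceptance criteria can be sketched as a small table of cases. The story (withdrawing from an account), the `withdraw` function, and the result strings below are all assumptions made for illustration, not part of any real system.

```python
from dataclasses import dataclass


@dataclass
class Account:
    balance: float


def withdraw(account: Account, amount: float) -> str:
    """Hypothetical story behaviour: debit the account or reject the request."""
    if amount <= 0:
        return "REJECTED: amount must be positive"
    if amount > account.balance:
        return "REJECTED: insufficient funds"
    account.balance -= amount
    return "OK"


# Each case: (description, starting balance, amount, expected result, expected balance)
ACCEPTANCE_CASES = [
    ("positive: withdraw within balance", 100.0, 40.0, "OK", 60.0),
    ("negative: withdraw more than balance", 100.0, 150.0,
     "REJECTED: insufficient funds", 100.0),
    ("negative: non-positive amount", 100.0, 0.0,
     "REJECTED: amount must be positive", 100.0),
]


def run_acceptance_cases():
    """Return (description, passed) for every acceptance case."""
    results = []
    for description, start, amount, expected, expected_balance in ACCEPTANCE_CASES:
        account = Account(balance=start)
        outcome = withdraw(account, amount)
        passed = outcome == expected and account.balance == expected_balance
        results.append((description, passed))
    return results
```

Keeping the cases in a table makes it easy to show the Business team which acceptance criteria are covered and which paths are negative.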

Business-facing examples help the team design the desired product. The Business experts might overlook functionality, or not get it right if it isn’t their field of expertise. The team might simply misunderstand some examples. Even when the programmers write code that makes the Business-facing tests pass, they might not be delivering what the customer really wants. That is where the tests to critique the product in the third and fourth quadrants come into play.

Quadrant Q3 – Regression testing and exploratory testing are positioned in Q3 because their focus is to validate the business aspects of the delivery. The testing team is not part of the Delivery team; it reviews the delivery from outside so that it is not influenced by technical or other programming constraints.

Quadrant Q4 – The types of tests that fall into the fourth quadrant are just as critical to Agile development as to any other type of software development. These tests are technology-facing and are discussed in technical rather than business terms. Technology-facing tests in Quadrant 4 are intended to critique product characteristics such as performance, robustness, and security. Performance testing, compatibility testing, and security testing are positioned in Q4. The testing team is not part of the Delivery team; it critiques the product from outside the team.

The testing positioned in Q3 and Q4 is carried out by the System Integration Testing team and is collectively termed System Integration Testing. The testing types carried out as part of System Integration Testing are listed below.

Security and Access Control Testing – Security and access control testing focuses on two key areas of security: application security, including access to data or business functions, and system security, including logging in to or remotely accessing the system. Application security ensures that, based on the desired security, users are restricted to specific functions or are limited in the data that is available to them. For example, everyone may be permitted to enter data and create new accounts, but only managers can delete them. If there is security at the data level, testing ensures that user type one can see all customer information, including financial data, whereas user type two sees only the demographic data for the same client. System security ensures that only those users granted access to the system can access the applications, and only through the appropriate gateways.
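The two application-security examples above (function-level and data-level restrictions) can be sketched as simple checks. The role names, actions, user types, and customer fields below are hypothetical stand-ins; a real test would call the application under test rather than these in-memory tables.

```python
# Hypothetical role-to-permission mapping, mirroring the example in the text.
ROLE_PERMISSIONS = {
    "clerk": {"enter_data", "create_account"},
    "manager": {"enter_data", "create_account", "delete_account"},
}

# Hypothetical data-level rules: which customer fields each user type may see.
VISIBLE_FIELDS = {
    "type_one": {"name", "postcode", "account_balance"},
    "type_two": {"name", "postcode"},
}


def is_allowed(role: str, action: str) -> bool:
    """Function-level security: unknown roles get no permissions."""
    return action in ROLE_PERMISSIONS.get(role, set())


def visible_view(user_type: str, customer: dict) -> dict:
    """Data-level security: return only the fields this user type may see."""
    allowed = VISIBLE_FIELDS.get(user_type, set())
    return {key: value for key, value in customer.items() if key in allowed}


def run_access_control_checks() -> None:
    customer = {"name": "A. Customer", "postcode": "12345", "account_balance": 250.0}
    # Positive checks: permitted actions succeed.
    assert is_allowed("clerk", "create_account")
    assert is_allowed("manager", "delete_account")
    # Negative checks: restricted actions and unknown roles are refused.
    assert not is_allowed("clerk", "delete_account")
    assert not is_allowed("guest", "enter_data")
    # Data-level checks: only user type one sees the financial field.
    assert "account_balance" in visible_view("type_one", customer)
    assert "account_balance" not in visible_view("type_two", customer)
```

The negative checks matter as much as the positive ones here, since a security defect usually shows up as an action that should have been refused.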

Failover and Recovery Testing – Failover and recovery testing ensures that the application can successfully fail over and recover from a variety of hardware, software, and network malfunctions without undue loss of data or data integrity. Failover testing ensures that when a failover condition occurs, the alternate or backup systems “take over” properly. Recovery testing is antagonistic: it deliberately induces conditions that cause application failure in order to invoke recovery procedures, verifying proper application and data recovery. The test plan should document how this will be tested, or why it will not be tested.
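A failover test of this kind can be illustrated with a toy model. The `Node` and `FailoverStore` classes below are assumptions made for the sketch (a primary that replicates each write to a standby); the scenario induces a primary failure and then checks that the backup takes over with no data lost.

```python
class Node:
    """Hypothetical storage node that can be switched off to simulate a failure."""

    def __init__(self, name: str):
        self.name = name
        self.healthy = True
        self.records = []

    def write(self, record: str) -> None:
        if not self.healthy:
            raise ConnectionError(f"{self.name} is down")
        self.records.append(record)


class FailoverStore:
    """Writes to the active node and switches to the backup on failure."""

    def __init__(self, primary: Node, backup: Node):
        self.primary = primary
        self.backup = backup
        self.active = primary

    def write(self, record: str) -> None:
        try:
            self.active.write(record)
        except ConnectionError:
            # Failover: the backup takes over and receives the pending write.
            self.active = self.backup
            self.active.write(record)
            return
        if self.active is self.primary and self.backup.healthy:
            self.backup.write(record)  # keep the standby in sync


def run_failover_scenario() -> FailoverStore:
    store = FailoverStore(Node("primary"), Node("backup"))
    store.write("order-1")
    store.primary.healthy = False  # induce the failure condition
    store.write("order-2")
    return store
```

After `run_failover_scenario()`, a test would assert that the active node is the backup and that it holds both records.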

Configuration/Compatibility Testing – Configuration testing checks the program’s compatibility with the many possible configurations of hardware and system software. It includes validating the application on different operating systems, Internet browsers, printers, and PCs.

Performance Testing – Performance testing measures response times, transaction rates, and other time-sensitive requirements. Its goal is to verify and validate that the performance requirements have been achieved. Performance testing is usually executed several times, each time with a different “background load” on the system. The initial test should be performed with a “nominal” load, similar to the normal load experienced (or anticipated) on the target system. A second performance test is run using a peak load. Additionally, performance test results can be used to profile and tune a system’s performance as a function of conditions such as workload or hardware configuration.
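The nominal-load and peak-load runs described above can be sketched with the Python standard library. Here `handle_request` is a hypothetical stand-in for a call to the system under test, and the user counts are arbitrary; a real performance test would normally use a dedicated load-testing tool.

```python
import statistics
import time
from concurrent.futures import ThreadPoolExecutor


def handle_request() -> None:
    """Stand-in for the system under test (hypothetical); replace with a real call."""
    time.sleep(0.001)


def timed_call() -> float:
    """Return the response time of one request, in seconds."""
    start = time.perf_counter()
    handle_request()
    return time.perf_counter() - start


def run_load(concurrent_users: int, requests_per_user: int) -> dict:
    """Fire requests from a pool of workers and summarise the response times."""
    total = concurrent_users * requests_per_user
    started = time.perf_counter()
    with ThreadPoolExecutor(max_workers=concurrent_users) as pool:
        futures = [pool.submit(timed_call) for _ in range(total)]
        latencies = [future.result() for future in futures]
    elapsed = time.perf_counter() - started
    return {
        "requests": total,
        "mean_s": statistics.mean(latencies),
        # quantiles(n=20) returns 19 cut points; the last is the 95th percentile.
        "p95_s": statistics.quantiles(latencies, n=20)[18],
        "throughput_rps": total / elapsed,
    }
```

For example, `run_load(2, 10)` could represent a nominal load and `run_load(10, 10)` a peak load; comparing the two summaries shows how response time and transaction rate change with workload.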

Security, failover and recovery, configuration/compatibility, and performance testing are all types of non-functional testing.

Regression Testing – Regression testing is the execution of a group of test cases called the regression test suite. The suite is made up of test cases that verify the key functionality of the application, including the critical business flows. Generally, the regression test suite is updated (test cases added or removed) after a particular version of the software is implemented in production. Regression tests are conducted to ensure that component-specific or system-specific fixes have no adverse impact on the application as a whole. Regression testing is performed at the end of test execution to confirm the readiness of the application.
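One way to picture a regression test suite is as a named subset of the test cases that covers the critical business flows. The sketch below assumes a hypothetical `regression` decorator and an `order_total` function; real projects often use test-framework features such as tags or markers for the same purpose.

```python
from typing import Callable, Dict

# Registry of test cases that belong to the regression suite.
REGRESSION_SUITE: Dict[str, Callable[[], None]] = {}


def regression(test: Callable[[], None]) -> Callable[[], None]:
    """Mark a test case as part of the regression suite (hypothetical helper)."""
    REGRESSION_SUITE[test.__name__] = test
    return test


def order_total(prices, tax_rate: float) -> float:
    """Hypothetical critical business function."""
    return round(sum(prices) * (1 + tax_rate), 2)


@regression
def test_order_total_includes_tax():
    assert order_total([10.0, 20.0], 0.10) == 33.0


@regression
def test_empty_order_totals_zero():
    assert order_total([], 0.10) == 0.0


def test_single_item_without_tax():
    # A sprint-level test that is not (yet) part of the regression suite.
    assert order_total([5.0], 0.0) == 5.0


def run_regression_suite() -> Dict[str, str]:
    """Run every registered case and report PASS or FAIL per case."""
    results = {}
    for name, test in REGRESSION_SUITE.items():
        try:
            test()
            results[name] = "PASS"
        except AssertionError:
            results[name] = "FAIL"
    return results
```

Running `run_regression_suite()` after a fix shows whether any critical flow was adversely affected, without rerunning every sprint-level test.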

Integration Testing – Integration tests are conducted to make sure that the individual application components operate together as a whole. The objective is to verify that all related configuration items (e.g. new, revised, and changed components) function according to the requirements and design when integrated and tested together in the target environment.

Test automation gains importance because of short delivery timelines and multiple iterations. Because code is deployed in every iteration, regression testing must be carried out each time to ensure that the newly deployed code does not adversely affect the existing code and environments. Test automation reduces the testing effort and timelines. The reasons to automate and the common challenges of automation are listed in the table below.

| Reasons to Automate | Common Challenges |
|---|---|
| Manual Testing takes too long | Lack of clear goals |
| Huge Time & effort savings | Attitude – why? |
| Reduce error prone Testing | Learning curve to automate |
| Free up testers to perform other tests | Initial investment is high |
| Safety net | Takes longer time to create Automation scripts |
| Provide feedback early and often | Code changes too often |
| Automation provides documentation | Legacy code |
| Reduces cost of Future Testing | Old habits |

The System Integration Testing team is responsible for creating the automated regression test suites. The SIT team works closely with the Delivery testing team to build and maintain both the manual regression test suite and the automated regression test suite.

At the end of each sprint, the SIT team updates the manual regression test suite to include the test cases written for that sprint. The Delivery testing team prepares the test cases for the sprint and hands them over to the SIT team to be added to the manual regression test suite. Once the manual regression test suite is updated, the SIT team updates the automated test suite to match it.
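The sprint-end hand-over described above can be modelled as a small bookkeeping structure. The class name, method names, and test case IDs below are hypothetical; the sketch only shows how the automation backlog follows from the difference between the manual and automated suites.

```python
from dataclasses import dataclass, field


@dataclass
class RegressionSuites:
    """Tracks test case IDs in the manual and automated regression suites."""

    manual: set = field(default_factory=set)
    automated: set = field(default_factory=set)

    def add_sprint_cases(self, sprint_cases) -> None:
        """SIT adds the cases handed over by Delivery testing to the manual suite."""
        self.manual |= set(sprint_cases)

    def automation_backlog(self) -> list:
        """Manual cases that have not been automated yet, in sorted order."""
        return sorted(self.manual - self.automated)

    def automate(self, case_ids) -> None:
        """Record cases as automated; only cases in the manual suite qualify."""
        unknown = set(case_ids) - self.manual
        if unknown:
            raise ValueError(f"not in manual suite: {sorted(unknown)}")
        self.automated |= set(case_ids)
```

For instance, starting from a suite where `TC-1` is already automated, adding `TC-2` and `TC-3` at the end of a sprint leaves both in the backlog until `automate` is called for them.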

The SIT team delivers the automated test suite to the Delivery testing team. The Delivery testing team runs the automated regression test suite before starting its sprint testing to validate that the newly deployed code has no negative impact on the existing system.

© 2024 Novature Tech Pvt Ltd. All Rights Reserved.