The rise of AI necessitates a greater emphasis on quality engineering over coding efforts.

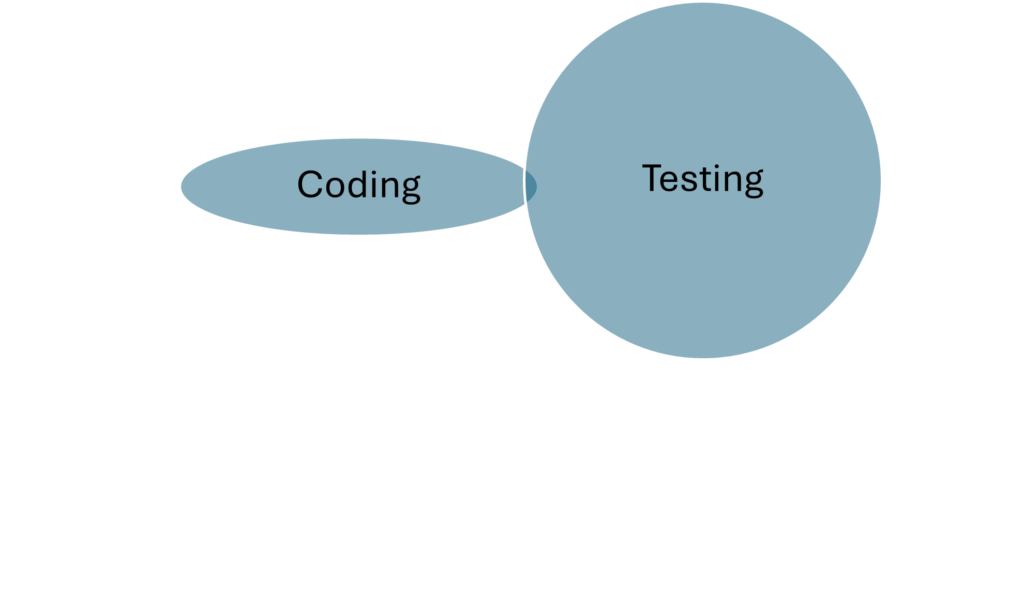

The rapid advancement of AI solutions streamlines the coding process but simultaneously amplifies the demand for rigorous quality engineering, encompassing both functional and non-functional testing. Think of a balloon: squeezing one end makes the other end bulge. In the same way, the effort AI removes from coding reappears as additional effort in testing.

Traditionally, about 50% of development effort has been allotted to testing. With the proliferation of AI-driven coding tools, this balance is shifting: testing effort should now be at least double the coding effort.

As AI streamlines software design and development tasks, the onus shifts to ensuring that the resulting code meets functional requirements and satisfies non-functional requirements such as performance, usability, and security.

Functional Testing assumes paramount importance in validating AI-generated code against diverse business scenarios, necessitating extensive test data management to uphold code quality.

Effective Test Data Management becomes imperative for supplying AI systems with the data they require. This often involves carefully curating production data while protecting sensitive information through data masking and similar techniques.
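As an illustration of masking, the sketch below pseudonymizes identifiers with a keyed hash (HMAC-SHA256), so masked values stay consistent across tables, and partially masks card numbers. The field names, the token format, and the hard-coded key are hypothetical; a real pipeline would load the key from a secrets store and follow the organization's data-protection rules.

```python
import hashlib
import hmac

# Hypothetical key for illustration only; load it from a secrets store in practice.
MASKING_KEY = b"replace-with-a-secret-key"

def pseudonymize(value: str) -> str:
    """Deterministically replace a value with a keyed-hash token.

    The same input always yields the same token, so joins across masked
    tables still line up, but the original cannot be recovered without the key.
    """
    digest = hmac.new(MASKING_KEY, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return "tok_" + digest[:16]

def mask_email(email: str) -> str:
    """Hide the local part but keep the domain, which is often useful for testing."""
    local, _, domain = email.partition("@")
    return f"{pseudonymize(local)}@{domain}"

def mask_card_number(card: str) -> str:
    """Show only the last four digits."""
    digits = [c for c in card if c.isdigit()]
    return "*" * (len(digits) - 4) + "".join(digits[-4:])

def mask_record(record: dict) -> dict:
    """Return a masked copy of a customer record (field names are hypothetical)."""
    masked = dict(record)
    masked["name"] = pseudonymize(record["name"])
    masked["email"] = mask_email(record["email"])
    masked["card_number"] = mask_card_number(record["card_number"])
    return masked

row = {"name": "Asha Rao", "email": "asha@example.com",
       "card_number": "4111 1111 1111 1234", "plan": "gold"}
print(mask_record(row))
```

Non-sensitive fields such as `plan` pass through unchanged, so the masked data still exercises the same business rules as production data.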

Performance Testing becomes indispensable as AI-driven applications handle voluminous data, necessitating optimization efforts for server configurations and response times.

Security Testing, encompassing Vulnerability Assessment and Penetration Testing (VA/PT), is critical to fortify AI-developed applications against potential threats, given their wide accessibility, their reliance on common (shared) code, and their exposure through the use of large-scale production data.

Consequently, while the advent of AI streamlines coding efforts, it accentuates the imperative for robust quality engineering practices.

Having covered the testing types and categories, we now look at AI's impact on the test process and on test automation.

Test Process

Testing AI-based applications requires a tailored approach due to the unique characteristics and challenges associated with AI systems. Here’s an overview of testing processes for AI-based application development:

1. Data Collection and Preparation:

– Gather high-quality, diverse, and representative data sets for training, validation, and testing.

– Preprocess and clean the data to remove noise, outliers, and biases that could adversely affect model performance.
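A minimal cleaning pass in plain Python might look like the following. It assumes hypothetical records with an `id` and a numeric `amount`, drops missing values and duplicate ids, and removes outliers with the common 1.5 × IQR rule. Real pipelines would typically use a dataframe library and domain-specific rules, and bias checks need separate treatment (see item 8).

```python
import statistics

def clean_amounts(records: list[dict]) -> list[dict]:
    """Drop missing amounts, duplicate ids, and IQR outliers (illustrative rules)."""
    # 1. Drop records whose amount is missing.
    present = [r for r in records if r.get("amount") is not None]

    # 2. Drop duplicate ids, keeping the first occurrence.
    seen, unique = set(), []
    for r in present:
        if r["id"] not in seen:
            seen.add(r["id"])
            unique.append(r)

    # 3. Drop outliers outside [Q1 - 1.5*IQR, Q3 + 1.5*IQR].
    #    statistics.quantiles needs at least two data points.
    amounts = [r["amount"] for r in unique]
    q1, _, q3 = statistics.quantiles(amounts, n=4)
    iqr = q3 - q1
    low, high = q1 - 1.5 * iqr, q3 + 1.5 * iqr
    return [r for r in unique if low <= r["amount"] <= high]

records = [
    {"id": 1, "amount": 10}, {"id": 2, "amount": 12},
    {"id": 2, "amount": 12},    # duplicate
    {"id": 3, "amount": 11},
    {"id": 4, "amount": None},  # missing value
    {"id": 5, "amount": 13}, {"id": 6, "amount": 12},
    {"id": 7, "amount": 500},   # outlier
]
print(clean_amounts(records))
```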

2. Model Training and Evaluation:

– Split the data into training, validation, and testing sets before training, so that the model is evaluated on data it has not seen.

– Train machine learning models using appropriate algorithms and techniques.

– Evaluate the model’s performance using metrics such as accuracy, precision, recall, F1-score, and area under the ROC curve (AUC).
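The split-then-evaluate steps can be sketched without any libraries, assuming binary labels in {0, 1}. The split uses a fixed seed so results are reproducible; the function names are illustrative. AUC is omitted here; in practice a library such as scikit-learn (`train_test_split`, `precision_recall_fscore_support`, `roc_auc_score`) covers all of these metrics.

```python
import random

def train_val_test_split(rows, val_frac=0.15, test_frac=0.15, seed=42):
    """Shuffle once with a fixed seed, then carve out test and validation sets."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    n_test = int(len(shuffled) * test_frac)
    n_val = int(len(shuffled) * val_frac)
    test = shuffled[:n_test]
    val = shuffled[n_test:n_test + n_val]
    train = shuffled[n_test + n_val:]
    return train, val, test

def binary_metrics(y_true, y_pred):
    """Accuracy, precision, recall and F1 for labels in {0, 1}."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    accuracy = (tp + tn) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}

print(binary_metrics([1, 0, 1, 1, 0, 0, 1, 0], [1, 0, 0, 1, 0, 1, 1, 0]))
```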

3. Unit Testing:

– Test individual components of the AI system, such as data preprocessing, feature extraction, and model training algorithms, in isolation.

– Use mock objects or synthetic data to simulate interactions with external dependencies.
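For example, a unit test can replace an external dependency with `unittest.mock.Mock` so that a feature-extraction step is tested in isolation. Here `extract_features` and its `fx_client.get_rate` dependency are hypothetical; the mock returns a fixed synthetic rate, so the test needs no network access.

```python
import unittest
from unittest.mock import Mock

def extract_features(transaction: dict, fx_client) -> dict:
    """Hypothetical feature step: convert an amount to USD and flag weekends.

    `fx_client` stands in for an external exchange-rate service exposing
    `get_rate(currency)`; the name and method are illustrative.
    """
    rate = fx_client.get_rate(transaction["currency"])
    return {
        "amount_usd": round(transaction["amount"] * rate, 2),
        "is_weekend": transaction["weekday"] >= 5,  # 5 = Saturday, 6 = Sunday
    }

class ExtractFeaturesTest(unittest.TestCase):
    def test_uses_rate_from_dependency(self):
        fx = Mock()
        fx.get_rate.return_value = 0.5  # synthetic rate, no network call
        features = extract_features({"amount": 100.0, "currency": "EUR", "weekday": 2}, fx)
        self.assertEqual(features["amount_usd"], 50.0)
        self.assertFalse(features["is_weekend"])
        fx.get_rate.assert_called_once_with("EUR")

    def test_flags_weekend(self):
        fx = Mock()
        fx.get_rate.return_value = 1.0
        features = extract_features({"amount": 10.0, "currency": "USD", "weekday": 6}, fx)
        self.assertTrue(features["is_weekend"])
```

Run it with `python -m unittest` (or pytest, which also collects `unittest.TestCase` classes).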

4. Integration Testing:

– Verify the interactions between different components of the AI system.

– Test the integration of AI models with other modules, such as data storage, APIs, and user interfaces.
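By contrast, an integration test wires the real components together without mocks and checks the contract at the boundary. In this sketch the `preprocess` function, the toy `ThresholdModel`, and the `handle_request` API handler are all hypothetical stand-ins; the point is that one test call exercises parsing, preprocessing, and inference together.

```python
import json

def preprocess(payload: dict) -> list[float]:
    """Hypothetical preprocessing: numeric features in a fixed order."""
    return [float(payload["age"]), float(payload["income"]) / 1000.0]

class ThresholdModel:
    """Toy stand-in for a trained model: a fixed linear score with a cut-off."""
    def predict(self, features: list[float]) -> int:
        score = 0.02 * features[0] + 0.01 * features[1]
        return int(score >= 1.0)

def handle_request(body: str, model: ThresholdModel) -> str:
    """Hypothetical API handler chaining JSON parsing, preprocessing and inference."""
    payload = json.loads(body)
    label = model.predict(preprocess(payload))
    return json.dumps({"approved": bool(label)})

def test_handle_request_end_to_end():
    # Real components, no mocks: checks the response contract, not just the model.
    response = json.loads(handle_request('{"age": 40, "income": 30000}', ThresholdModel()))
    assert set(response) == {"approved"}
    assert isinstance(response["approved"], bool)
```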

5. Functional Testing:

– Ensure that the AI system meets the functional requirements specified for the project.

– Test various functionalities such as data input/output, model inference, and response to user inputs.
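Functional tests are often written as a table of business scenarios with expected outcomes. In the sketch below, `route_ticket` is a hypothetical keyword-based stand-in for an AI ticket classifier; with a real model the same table-driven structure applies, although expected outputs may need tolerances or accepted alternatives.

```python
import unittest

def route_ticket(text: str) -> str:
    """Hypothetical stand-in for an AI classifier that routes support tickets."""
    lowered = text.lower()
    if "refund" in lowered or "charged twice" in lowered:
        return "billing"
    if "password" in lowered or "login" in lowered:
        return "account"
    return "general"

# Each row: (business scenario, user input, expected route).
SCENARIOS = [
    ("duplicate charge", "I was charged twice this month", "billing"),
    ("refund request", "Please process my REFUND", "billing"),
    ("locked out", "Cannot login after password reset", "account"),
    ("empty input", "", "general"),
]

class RouteTicketFunctionalTest(unittest.TestCase):
    def test_business_scenarios(self):
        for name, text, expected in SCENARIOS:
            # subTest reports each failing scenario separately.
            with self.subTest(scenario=name):
                self.assertEqual(route_ticket(text), expected)
```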

6. Performance Testing:

– Evaluate the performance of the AI system under different workloads, data volumes, and environmental conditions.

– Measure factors such as latency, throughput, resource utilization, and scalability.
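A rough latency and throughput probe can be built from the standard library, assuming the system under test can be called as a Python function (here `fake_inference`, a hypothetical placeholder that just sleeps). It reports median, 95th, and 99th percentile latency plus requests per second. Threads suit I/O-bound calls such as HTTP requests; for realistic load tests, dedicated tools such as JMeter, Locust, or k6 are the usual choice.

```python
import statistics
import time
from concurrent.futures import ThreadPoolExecutor

def measure(call, n_requests=200, concurrency=8):
    """Fire n_requests calls across a thread pool; report latency percentiles and throughput."""
    def timed(_):
        start = time.perf_counter()
        call()
        return time.perf_counter() - start

    wall_start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        latencies = list(pool.map(timed, range(n_requests)))
    wall = time.perf_counter() - wall_start

    # 99 cut points; "inclusive" keeps estimates within the observed range.
    cuts = statistics.quantiles(latencies, n=100, method="inclusive")
    return {
        "p50_ms": cuts[49] * 1000,
        "p95_ms": cuts[94] * 1000,
        "p99_ms": cuts[98] * 1000,
        "throughput_rps": n_requests / wall,
    }

def fake_inference():
    """Placeholder for a real model call, e.g. an HTTP request to the service."""
    time.sleep(0.005)

print(measure(fake_inference))
```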

7. Robustness Testing:

– Assess the robustness of the AI system by subjecting it to edge cases, outliers, adversarial inputs, and unexpected scenarios.

– Test the system’s ability to handle missing or noisy data, as well as changes in the distribution of input data.
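One simple robustness check adds small random noise to inputs and verifies that predictions barely move, and separately confirms that missing values are handled rather than crashing. The logistic `predict` function, the noise level, and the tolerance are illustrative assumptions. The sigmoid is written in a numerically stable form, because a naive `1 / (1 + exp(-z))` raises `OverflowError` for large negative `z`, exactly the kind of edge case this testing is meant to expose.

```python
import math
import random

def predict(features):
    """Toy stand-in for a model: logistic score over two features.

    Missing values (None or NaN) are imputed with 0.0.
    """
    clean = [0.0 if f is None or (isinstance(f, float) and math.isnan(f)) else f
             for f in features]
    z = 1.5 * clean[0] - 0.8 * clean[1]
    # Numerically stable sigmoid: never calls exp() on a large positive number.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)

def noise_stability(model, inputs, sigma=0.01, trials=50, tol=0.05, seed=0):
    """Fraction of inputs whose score moves less than `tol` under Gaussian noise."""
    rng = random.Random(seed)
    stable = 0
    for x in inputs:
        base = model(x)
        worst = max(abs(model([v + rng.gauss(0.0, sigma) for v in x]) - base)
                    for _ in range(trials))
        if worst < tol:
            stable += 1
    return stable / len(inputs)
```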

8. Ethical and Bias Testing:

– Evaluate the AI system for potential ethical implications and biases, such as unfair discrimination or lack of fairness across different demographic groups.

– Implement techniques such as fairness testing, bias detection, and algorithmic transparency to mitigate biases and ensure ethical AI.
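A basic bias check compares how often the model predicts the positive outcome for each group. The sketch computes per-group selection rates, the demographic parity difference (highest rate minus lowest), and the ratio of lowest to highest rate; one commonly cited rule of thumb flags ratios below 0.8. The data and group labels are made up, and libraries such as Fairlearn provide these metrics ready-made.

```python
from collections import defaultdict

def selection_rates(predictions, groups):
    """Share of positive predictions (1s) within each demographic group."""
    totals, positives = defaultdict(int), defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}

def fairness_report(predictions, groups):
    rates = selection_rates(predictions, groups)
    lo, hi = min(rates.values()), max(rates.values())
    return {
        "selection_rates": rates,
        # Demographic parity difference: gap between highest and lowest rate.
        "parity_difference": hi - lo,
        # Lowest rate divided by highest rate (1.0 means equal rates).
        "impact_ratio": lo / hi if hi else 1.0,
    }

preds = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["A"] * 4 + ["B"] * 4
print(fairness_report(preds, groups))
```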

9. Security Testing:

– Identify and address security vulnerabilities in the AI system, such as data leaks, model inversion attacks, and adversarial attacks.

– Implement measures such as data encryption, access controls, and input validation to protect against security threats.
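Input validation is one of the cheaper layers to test. The sketch below rejects malformed, oversized, or non-finite input before it reaches a model; the schema (a `text` string and a `features` list) and the limits are hypothetical. Validation alone does not defend against adversarial examples or model inversion, which need dedicated testing.

```python
import math

MAX_TEXT_CHARS = 2000  # hypothetical limits
MAX_FEATURES = 100

def validate_request(payload: object) -> dict:
    """Reject malformed or oversized input before it reaches the model."""
    if not isinstance(payload, dict):
        raise ValueError("payload must be a JSON object")
    unexpected = set(payload) - {"text", "features"}
    if unexpected:
        raise ValueError(f"unexpected fields: {sorted(unexpected)}")

    text = payload.get("text", "")
    if not isinstance(text, str) or len(text) > MAX_TEXT_CHARS:
        raise ValueError(f"text must be a string of at most {MAX_TEXT_CHARS} characters")

    features = payload.get("features", [])
    if not isinstance(features, list) or len(features) > MAX_FEATURES:
        raise ValueError(f"features must be a list of at most {MAX_FEATURES} numbers")
    for f in features:
        # bool is a subclass of int, so exclude it explicitly.
        if isinstance(f, bool) or not isinstance(f, (int, float)) or not math.isfinite(f):
            raise ValueError("features must be finite numbers")

    return {"text": text, "features": [float(f) for f in features]}
```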

10. Regression Testing:

– Continuously retest the AI system after code changes, model updates, or infrastructure modifications to ensure that existing functionalities remain intact.

– Automate regression tests to streamline the testing process and maintain test coverage.
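An automated regression gate for models can compare the latest evaluation metrics against a stored baseline and replay a set of golden inputs with recorded outputs. The baseline numbers, metric names, and tolerance below are hypothetical; in a CI pipeline the check would fail the build when either returned list is non-empty.

```python
BASELINE = {"accuracy": 0.91, "recall": 0.88}  # hypothetical stored baseline

def check_regression(current: dict, baseline: dict, tolerance: float = 0.01) -> list[str]:
    """Metrics that are missing or dropped more than `tolerance` below baseline."""
    failures = []
    for name, base_value in baseline.items():
        value = current.get(name)
        if value is None:
            failures.append(f"{name}: missing from current run")
        elif value < base_value - tolerance:
            failures.append(f"{name}: {value:.3f} < baseline {base_value:.3f} - {tolerance}")
    return failures

def check_golden_outputs(predict, golden: list[tuple]) -> list:
    """Inputs whose prediction no longer matches the recorded expected output."""
    return [x for x, expected in golden if predict(x) != expected]

print(check_regression({"accuracy": 0.87, "recall": 0.88}, BASELINE))
```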

11. User Acceptance Testing (UAT):

– Involve end-users or domain experts in testing the AI system to validate its usability, effectiveness, and alignment with user requirements.

– Gather feedback and iterate on the system based on user input.

12. Continuous Monitoring and Feedback:

– Implement mechanisms for monitoring the AI system in production to detect performance degradation, concept drift, or other issues.

– Collect user feedback and usage data to inform future improvements and iterations of the AI system.
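Drift in production inputs is often tracked with the Population Stability Index (PSI), which compares the distribution of a feature in live traffic with its distribution at training time. A minimal version, assuming numeric features and bins at the reference sample's deciles, is sketched below. A commonly cited rule of thumb treats PSI below 0.1 as stable and above 0.25 as a significant shift, but thresholds should be tuned per feature.

```python
import bisect
import math

def psi(expected, actual, bins=10, eps=1e-4):
    """Population Stability Index between a reference sample and a live sample.

    `expected` should hold at least `bins` values; both samples must be non-empty.
    """
    ordered = sorted(expected)
    # Interior bin edges at the reference sample's quantiles.
    edges = [ordered[int(len(ordered) * k / bins)] for k in range(1, bins)]

    def shares(sample):
        counts = [0] * bins
        for v in sample:
            counts[bisect.bisect_right(edges, v)] += 1
        # eps keeps empty bins from producing log(0) or division by zero.
        return [max(c / len(sample), eps) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ai, ei in zip(a, e))

reference = [i / 1000 for i in range(1000)]       # training-time values
live = [0.5 + i / 2000 for i in range(1000)]      # live values shifted upward
print(round(psi(reference, live), 2))
```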

By following these testing processes, development teams can ensure the reliability, performance, security, and ethical compliance of AI-based applications throughout the development lifecycle.

Test Automation

AI has significantly impacted test automation, revolutionizing how software testing is conducted. Here’s how:

1. Test Case Generation: AI algorithms can analyze software requirements and automatically generate test cases, saving significant time and effort for testers.

2. Test Data Generation: AI can generate diverse and comprehensive test data sets, covering various scenarios and edge cases, enhancing test coverage and effectiveness.

3. Defect Prediction: Machine learning algorithms can analyze code changes, historical defect data, and other factors to predict areas of the software most likely to contain defects. This helps prioritize testing efforts.

4. Test Execution Optimization: AI-powered test automation tools can intelligently prioritize and optimize test execution based on factors like code changes, risk analysis, and historical test results.

5. Dynamic Test Maintenance: AI algorithms can analyze test results and automatically update test scripts to adapt to changes in the application under test, reducing maintenance overhead.

6. Visual Testing: AI can automate visual testing by analyzing screenshots or UI elements to detect visual inconsistencies across different platforms, screen sizes, and resolutions.

7. Natural Language Processing (NLP) for Testing: NLP techniques enable AI to understand and process natural language test cases, allowing for more efficient test case creation and execution.

8. Self-healing Tests: AI-driven testing frameworks can automatically identify and fix flaky tests or test failures caused by environmental issues, reducing false positives and increasing test reliability.

9. Performance Testing: AI algorithms can simulate real-world user behavior and dynamically adjust load levels to conduct more accurate and efficient performance testing.

10. Predictive Analytics for Testing: AI can analyze various testing metrics and historical data to provide insights into potential future issues, allowing organizations to proactively address them.
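Items 3 and 4 above can be approximated, even without machine learning, by a risk score per test. The sketch below ranks tests by their historical failure rate and by how much of the code they cover was touched in the current change; the `CaseHistory` structure and the weights are hypothetical. An ML-based tool would learn such weights from history instead of fixing them by hand.

```python
from dataclasses import dataclass, field

@dataclass
class CaseHistory:
    name: str
    runs: int                                   # historical executions
    failures: int                               # historical failures
    covered_files: set[str] = field(default_factory=set)

def prioritize(tests, changed_files, w_fail=0.6, w_change=0.4):
    """Order tests by a simple risk score (weights are illustrative, not tuned).

    risk = w_fail * historical failure rate
         + w_change * share of the test's covered files touched by the change
    """
    def risk(t):
        fail_rate = t.failures / t.runs if t.runs else 1.0  # never-run tests go early
        touched = len(t.covered_files & changed_files)
        change_share = touched / len(t.covered_files) if t.covered_files else 0.0
        return w_fail * fail_rate + w_change * change_share
    return sorted(tests, key=risk, reverse=True)

tests = [
    CaseHistory("test_login", runs=100, failures=2, covered_files={"auth.py"}),
    CaseHistory("test_checkout", runs=100, failures=10, covered_files={"cart.py", "payment.py"}),
    CaseHistory("test_profile", runs=50, failures=0, covered_files={"profile.py"}),
    CaseHistory("test_new_feature", runs=0, failures=0, covered_files={"search.py"}),
]
print([t.name for t in prioritize(tests, {"auth.py"})])
```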

Overall, AI in test automation streamlines testing processes, improves test coverage, and enhances the accuracy and efficiency of software testing efforts. However, it’s essential to carefully integrate AI technologies into existing testing workflows and continuously refine them to maximize their benefits.

For comprehensive testing strategies tailored to AI-driven applications, please contact info@novaturetech.com.

Author: admin | Posted On: 7th June 2024 | Category: Article

© 2024 Novature Tech Pvt Ltd. All Rights Reserved.